It’s the middle of 2024 and our world is all AI, all the time. AI dominates tech news and the financial world. Giant companies are spending unbelievable amounts of money to improve AI technology and bulk up their server capacity. Nvidia – the company that makes the chips best suited to running AI – is a three trillion dollar company. In the last few months it has occasionally passed Apple and Microsoft to become the most valuable company in the universe.

The AI boom has sucked all the oxygen out of the room. Virtual reality headsets – Meta Quest 3, Apple Vision Pro – are still selling modestly but they’ve completely left the conversation.

Then there are the nearly invisible devices. These are the ones that look like sunglasses but do some interesting tech tricks. We haven’t settled on a name for them yet. “AI enabled glasses.” “AR glasses.” “Holographic AR glasses.” Let’s call them “smart glasses” for now.

I keep telling you that smart glasses are going to be a big deal but you can be excused if that feels imaginary. You probably haven’t heard anything about them other than from me.

And yet smart glasses are advancing down the field, a few feet at a time.

You can buy glasses today in two distinct categories:

Glasses with AI: These do not display anything interesting for you to see. They look like ordinary sunglasses – but they have AI built in to respond to questions, along with a camera and tiny speakers next to your ears. I’ll tell you about those below.

Glasses that display a large virtual screen: These don’t have any AI – really, no brain at all – but they can display what appears to be a large screen or two hanging in the air in front of you. They also have speakers in the arms, but no camera. They’re perfect for watching movies. There is a lot of activity in this category – new models, better visuals, more access to apps. Take a look at the latest from Rokid and Xreal if you’re interested.

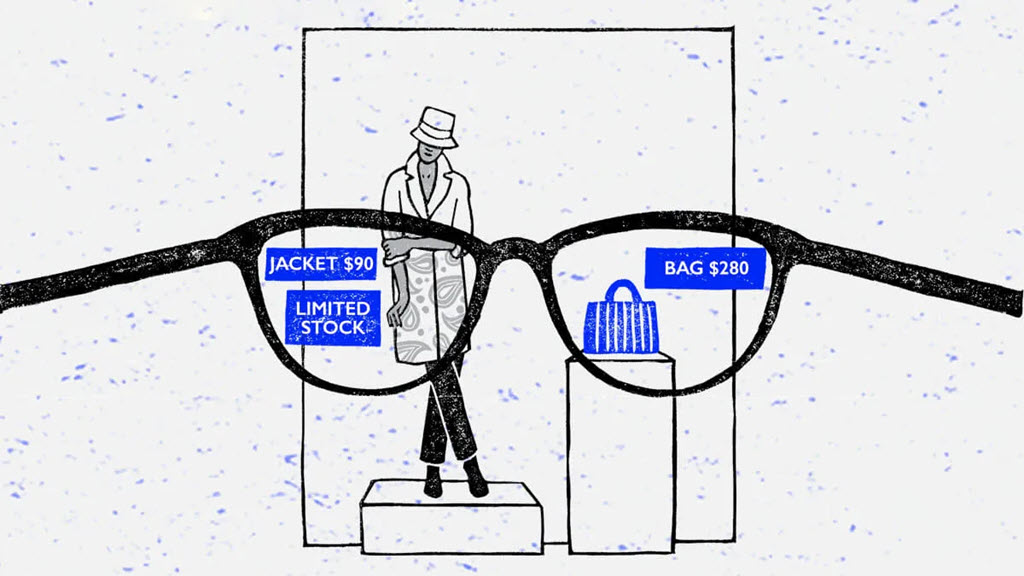

Either of these categories might give you much happiness today, but they are stepping stones on the way to the real goal, which is glasses that have built-in AI and visual displays or text hanging in the air. Recently Mark Zuckerberg dropped more hints that we might see the first public demo of those before long.

Ray-Ban Meta Smart Glasses were introduced in October 2023. They have a built-in camera for instant access to pretty good pictures and videos; decent speakers in the stems for music, podcasts, and hearing conversations and answers to questions; and a microphone for conversations and asking questions.

The Meta glasses also let you talk to an AI assistant with no fuss. When you ask a question, the glasses send it to your phone via Bluetooth; the phone sends it to Meta’s servers; and the response is spoken out loud in your ear. It’s seamless and fast.

You could ask anything that might otherwise be a Google search or an AI question, but the glasses can also work with what they see or hear. So you can ask for walking directions and the glasses will tell you to turn when you get to the intersection; they can identify trees and flowers that you’re looking at; you can ask for price comparisons on an object in your hand; and they can translate words spoken to you.

Does it work? See below.

There are some controls done with taps and gestures, but saying “Hey Meta” will also get the attention of the glasses, exactly like “Hey Google” or “Alexa” or “Hey Siri.”

Meta started with the classic Ray-Ban Wayfarers but has just introduced some new styles if you’d rather have cat-eye frames.

Surprising sales success

Sales numbers aren’t available but Meta’s smart glasses have been “a much bigger success than we expected,” according to a staff memo. Last week Meta restructured its internal divisions to focus on Wearables like the glasses.

Meta’s hardware manufacturer, EssilorLuxotica, increased production to meet demand for the Meta glasses, and is being approached by other giant tech companies interested in developing their own smart glasses.

The first competitors will appear shortly. This week Solos announced AirGo Vision, smart glasses that look and work quite a lot like Meta’s glasses, but use GPT-4o for the AI features. That’s the chatty assistant from OpenAI which was introduced with a voice that sounded strikingly like Scarlett Johansson’s even though she had said, no, don’t use my voice. It was an unforced error that completely overshadowed the actual AI capabilities, which were very impressive. OpenAI spent roughly 17 trillion dollars on PR trying to back away from the Scarlett controversy and has just withdrawn the voice mode completely for a month for “improvements.”

AI in smart glasses is spotty but improving

Smart glasses are for tech enthusiasts and early adopters, sure, but those early users love them, and the AI features are improving so quickly that mainstream acceptance may come more quickly than you expect.

Examples: Wired – “My Meta Ray-Ban Wayfarers and I have grown inseparable.” Forbes – “How Meta Ray Ban Smart Glasses Displaced My Smartphone On Opening Day.” TechRadar – “I finally tried the Meta AI in my Ray-Ban smart glasses – and it’s by far the best AI wearable.”

If you’re curious but you’re not a tech enthusiast, you might want to wait, because all of those reviews (and all the others I’ve read) concede that the AI assistance is handy but obviously still at a very early stage. Answers are sometimes vague or disappointing. Translation is still mostly a dream. The glasses are not yet tied into GPS for directions. The AI features will improve rapidly but today, July 2024, they are mostly a gimmick and a glimpse of the future.

But bear in mind that Ray-Ban Wayfarers are typically $150 or more. The Meta Ray-Bans are $299. So a small amount of extra money gets you instant photos and videos and the equivalent of decent earbuds, plus an early look at what it’s like to have an AI assistant. That’s a pretty good deal. Even the non-AI features are rapidly improving – last week Meta updated the glasses to permit videos up to three minutes long, up from the original one-minute limit.

The future is so bright that we all might want to wear shades before long.